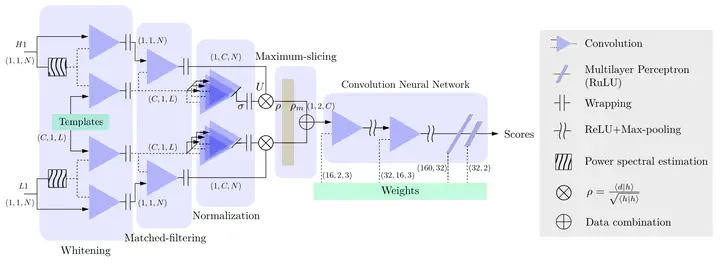

Structure of the matched-filtering convolutional neural network (MFCNN)

Highlights

Real LIGO Data Analysis: First comprehensive deep learning application to actual LIGO observational data (O1), going beyond simulations to real detector output.

Matched-Filtering Inspired CNN: Novel “MFCNN” architecture that incorporates matched-filtering principles into convolutional neural network design for improved weak signal recognition.

All O1/O2 Events Detected: Successfully identifies all 11 confirmed gravitational wave events from LIGO’s first two observing runs with high confidence.

~2000 New Triggers: Discovers approximately 2000 gravitational wave trigger candidates in O1 data, suggesting potential for previously unidentified weak signals.

Comparable Efficiency: Achieves signal recognition accuracy and computational efficiency matching other state-of-the-art deep learning methods while introducing a physics-motivated architecture.

Public Trigger Catalog: Makes the trigger catalog publicly available on GitHub, enabling community follow-up studies and validation.

Key Contributions

1. Bridging Simulation and Reality

Challenge: Sim-to-Real Gap

Prior deep learning studies for gravitational wave detection:

- Trained and tested on simulated data

- Idealized noise characteristics

- Perfect waveform templates

- Controlled signal-to-noise ratios

- Limited validation on real detector data

Real LIGO Data Complications

- Non-Gaussian noise transients (glitches)

- Time-varying detector sensitivity

- Environmental disturbances

- Data quality variations

- Complex instrumental artifacts

This Work’s Achievement

- Comprehensive application to full O1 observing run

- Robust performance on real detector noise

- Validation on confirmed events (ground truth)

- Discovery of new trigger candidates

- Demonstrates practical viability of deep learning for GW astronomy

2. Matched-Filtering CNN (MFCNN) Architecture

Motivation

Traditional matched filtering:

- Correlates data with template waveforms

- Optimal for Gaussian noise

- Computationally expensive for large template banks

- Physics-based, well-understood

Convolutional Neural Networks:

- Learn features from data automatically

- Fast inference after training

- Flexible, can handle non-Gaussian features

- Less interpretable

MFCNN Design Philosophy

Combine strengths of both approaches:

- CNN structure inspired by matched-filtering operations

- Convolutional filters learn template-like features

- Multi-scale processing mimics filtering at different parameters

- Physics-motivated architecture improves interpretability
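
The link between the two approaches can be illustrated with a minimal sketch. It assumes whitened data with unit-variance noise, in which case matched filtering reduces to sliding a unit-norm template over the data, the same arithmetic a 1D convolutional layer performs with a fixed kernel. The function name `matched_filter_snr` and the toy chirp are illustrative assumptions, not the paper's implementation.

```python
import math
import random

def matched_filter_snr(data, template):
    """Slide a unit-norm template over whitened data (cross-correlation).

    This is the same arithmetic as a 1D convolutional layer whose kernel
    is fixed to the template instead of being learned.
    """
    norm = math.sqrt(sum(h * h for h in template))
    kernel = [h / norm for h in template]
    n_out = len(data) - len(kernel) + 1
    return [
        sum(k * data[i + j] for j, k in enumerate(kernel))
        for i in range(n_out)
    ]

# Toy chirp-like template with rising frequency (illustrative only).
template = [math.sin(2 * math.pi * (0.02 + 0.002 * t) * t) for t in range(64)]

# Unit-variance Gaussian noise with the template injected at sample 100.
rng = random.Random(0)
data = [rng.gauss(0.0, 1.0) for _ in range(256)]
offset = 100
for j, h in enumerate(template):
    data[offset + j] += 3.0 * h

snr = matched_filter_snr(data, template)
peak_index = max(range(len(snr)), key=lambda i: snr[i])
```

In this toy setup the output should peak at or near the injection offset. A learned convolutional filter bank plays the role of many such templates evaluated at once.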

Architecture Components

Input Layer

- Time-series gravitational wave strain data

- Whitened using detector noise PSD

- Fixed duration windows (e.g., 1-4 seconds)

- Dual input: Strain from two LIGO detectors (Hanford, Livingston)
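
Whitening can be sketched as follows, assuming a one-sided noise PSD is already available at the FFT frequencies of the segment (in practice it would be estimated from nearby data, for example with Welch's method). The function `whiten` and its normalization convention are assumptions made for this illustration.

```python
import numpy as np

def whiten(strain, psd, sample_rate):
    """Whiten a real time series given a one-sided PSD.

    `psd` must be sampled at np.fft.rfftfreq(len(strain), 1 / sample_rate).
    The final factor is chosen so that Gaussian noise with this PSD
    comes out with approximately unit variance.
    """
    n = len(strain)
    strain_f = np.fft.rfft(strain)
    # Divide each frequency bin by the amplitude spectral density.
    white_f = strain_f / np.sqrt(psd)
    return np.fft.irfft(white_f, n=n) * np.sqrt(2.0 / sample_rate)

# Toy check with white noise of standard deviation 3 sampled at 4096 Hz.
sample_rate = 4096.0
sigma = 3.0
rng = np.random.default_rng(0)
noise = sigma * rng.standard_normal(4096)
# One-sided PSD of white noise: 2 * sigma^2 / sample_rate in every bin.
psd = np.full(len(noise) // 2 + 1, 2.0 * sigma**2 / sample_rate)
whitened = whiten(noise, psd, sample_rate)
```

For real detector data the PSD varies strongly with frequency, so whitening suppresses the bands where the noise is loudest before the data reach the network.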

Convolutional Layers

- Multiple filter banks at different scales

- Early layers: High-frequency features

- Deep layers: Low-frequency, long-duration patterns

- Mimics matched filtering across parameter space

Pooling Layers

- Max pooling for translational invariance

- Reduces dimensionality while preserving signal features

- Helps with varied arrival times in data window
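
A small sketch shows why max pooling helps with varied arrival times. The helper `max_pool1d` is a hypothetical stand-in for a framework pooling layer; note that the invariance only holds for shifts that stay inside one pooling window.

```python
def max_pool1d(values, size):
    """Non-overlapping max pooling; trailing samples that do not fill
    a complete window are dropped."""
    return [
        max(values[i:i + size])
        for i in range(0, len(values) - size + 1, size)
    ]

# The same feature activation, arriving one sample later in the second case.
feature_a = [0, 0, 5, 0, 0, 0, 0, 0]
feature_b = [0, 0, 0, 5, 0, 0, 0, 0]

pooled_a = max_pool1d(feature_a, 4)
pooled_b = max_pool1d(feature_b, 4)
```

Both inputs pool to the same output, so later layers see an identical representation despite the shift.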

Fully Connected Layers

- Integration of features from both detectors

- Coherent detection across the detector network

- Classification: Signal vs. noise

Output Layer

- Binary classification: GW signal present or absent

- Confidence score (probability)

- Threshold for trigger generation

Training Strategy

Training Data

- Simulated GW signals (binary black hole coalescences)

- Real LIGO noise from quiet data segments

- Injection of signals into real noise

- Data augmentation: Time shifts, amplitude variations

Loss Function

- Binary cross-entropy

- Class weighting to handle imbalance (more noise than signal)
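
One common way to apply class weighting is to scale the positive-class term of the binary cross-entropy. The sketch below assumes that form; the `pos_weight` parameter is an illustrative choice and the source does not state which weighting scheme was used.

```python
import math

def weighted_bce(probs, labels, pos_weight=1.0, eps=1e-12):
    """Mean binary cross-entropy with the signal (label 1) term
    multiplied by `pos_weight`."""
    total = 0.0
    for p, y in zip(probs, labels):
        # Clip to avoid log(0) for saturated predictions.
        p = min(max(p, eps), 1.0 - eps)
        total -= pos_weight * y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(probs)

# Three noise samples and one signal sample, all predicted at 0.5.
probs = [0.5, 0.5, 0.5, 0.5]
labels = [0, 0, 0, 1]
unweighted = weighted_bce(probs, labels)
reweighted = weighted_bce(probs, labels, pos_weight=3.0)
```

With `pos_weight=3.0` the single signal sample contributes as much to the loss as the three noise samples combined, which counteracts the imbalance.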

Optimization

- Stochastic gradient descent or Adam

- Learning rate scheduling

- Dropout and regularization for generalization

Validation

- Held-out simulated data

- Cross-validation on known events

- Tuning on O1 subset, testing on full run

3. Comprehensive O1 Data Analysis

LIGO O1 Observing Run

- Duration: September 2015 - January 2016

- Detectors: LIGO Hanford, LIGO Livingston

- Confirmed Events: 3 binary black hole mergers (GW150914, GW151012, GW151226)

- Data Quality: Variable, with numerous glitches and instrumental artifacts

- Total Data: Months of continuous observation

Analysis Pipeline

- Data Preparation: Download O1 strain data, quality flags

- Preprocessing: Whitening, bandpassing, segmentation

- Inference: Apply trained MFCNN to each data segment

- Trigger Generation: Identify segments with high classification probability

- Clustering: Group nearby triggers, select loudest

- Candidate Ranking: Sort by confidence score

- Follow-up: Parameter estimation for high-confidence triggers

Glitch Handling

- Many instrumental transients produce high SNR

- MFCNN learns to distinguish GW signals from glitches via training

- Some glitches still trigger false positives

- Post-processing: Data quality vetoes, coincidence tests

- Robust performance despite glitch prevalence

Methodology

Network Training

Simulated Signal Generation

- Waveforms: IMRPhenomD, SEOBNRv4 models for binary black holes

- Parameters:

  - Component masses: 5-100 M☉

  - Spins: Aligned, magnitudes 0-0.85

  - Sky locations: Isotropic distribution

  - Distance: Adjusted for SNR distribution

- Injection: Signals added to real LIGO noise segments

- SNR Range: 5-50 (covering barely detectable to loud events)

Noise Characterization

- Use real O1 data from quiet periods (no known signals)

- Capture actual detector noise characteristics

- Include glitches to train discrimination

- Time-varying noise handled via diverse training samples

Data Augmentation

- Random time shifts: Signal arrival within window

- Amplitude scaling: Vary effective SNR

- Phase randomization

- Sky location variations (affects detector response)
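
The injection and augmentation steps can be sketched as below, assuming whitened noise with unit variance so that the optimal SNR of a waveform equals the square root of its summed squares. The helper names and the uniform SNR draw are assumptions for illustration; only the 5-50 SNR range comes from the description above.

```python
import math
import random

def inject(noise, waveform, target_snr, start):
    """Add `waveform` to whitened, unit-variance `noise` at index `start`,
    rescaled so that its optimal SNR equals `target_snr`."""
    if start < 0 or start + len(waveform) > len(noise):
        raise ValueError("waveform does not fit inside the noise segment")
    norm = math.sqrt(sum(h * h for h in waveform))
    scale = target_snr / norm
    out = list(noise)
    for j, h in enumerate(waveform):
        out[start + j] += scale * h
    return out

def make_sample(noise, waveform, rng, snr_range=(5.0, 50.0)):
    """Draw a random SNR and arrival time, then inject the waveform."""
    snr = rng.uniform(*snr_range)
    start = rng.randrange(0, len(noise) - len(waveform) + 1)
    return inject(noise, waveform, snr, start), snr, start

rng = random.Random(42)
noise = [rng.gauss(0.0, 1.0) for _ in range(128)]
waveform = [math.sin(0.3 * t) for t in range(32)]
sample, snr, start = make_sample(noise, waveform, rng)
```

Calling `make_sample` repeatedly on the same noise segment yields many distinct training examples, which is the point of the time-shift and amplitude augmentations.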

Architecture Optimization

Hyperparameter Tuning

- Number of convolutional layers: 3-7 layers tested

- Filter sizes: Various temporal scales

- Pooling strategies: Max vs. average pooling

- Fully connected layer width

- Dropout rates for regularization

- Batch normalization placement

Performance Metrics

- Accuracy: Correct classifications / total

- Precision: True positives / (true positives + false positives)

- Recall (Sensitivity): True positives / (true positives + false negatives)

- ROC curve and AUC

- False alarm rate at fixed detection efficiency
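
These metrics can be computed directly from labels and classifier scores. The following is a minimal sketch with hypothetical function names; the pairwise AUC formula is quadratic in the number of samples and is meant only for small illustrative inputs.

```python
def classification_metrics(labels, scores, threshold=0.5):
    """Accuracy, precision, and recall at a given score threshold."""
    tp = fp = tn = fn = 0
    for y, s in zip(labels, scores):
        predicted = s >= threshold
        if predicted and y == 1:
            tp += 1
        elif predicted:
            fp += 1
        elif y == 1:
            fn += 1
        else:
            tn += 1
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }

def roc_auc(labels, scores):
    """Probability that a random signal outscores a random noise sample
    (ties count as one half)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

labels = [1, 1, 0, 0, 1]
scores = [0.9, 0.4, 0.6, 0.1, 0.8]
metrics = classification_metrics(labels, scores)
auc = roc_auc(labels, scores)
```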

O1 Data Processing

Data Access

- LIGO Open Science Center (LOSC) / Gravitational Wave Open Science Center (GWOSC)

- Public strain data for O1 and O2

- Data quality flags and metadata

Preprocessing Pipeline

- Download: O1 strain data (months of observations)

- Quality Cuts: Remove periods with poor data quality

- Whitening: Divide by square root of noise PSD

- Bandpassing: 20-500 Hz typical range for stellar-mass BBH

- Windowing: Segment into overlapping windows for CNN input
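
The windowing step can be sketched as generating index ranges for overlapping segments. The function name, window length, and stride below are illustrative assumptions; the source does not specify the overlap used.

```python
def sliding_windows(n_samples, window, stride):
    """Return (start, stop) index pairs for overlapping windows that
    fit entirely inside a series of `n_samples` samples."""
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    return [
        (start, start + window)
        for start in range(0, n_samples - window + 1, stride)
    ]

# Example: 10 samples, windows of 4 samples advancing by 2 (50% overlap).
windows = sliding_windows(10, window=4, stride=2)
```

A stride smaller than the window length ensures that a signal falling on the edge of one window appears near the middle of a neighbouring one.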

Inference at Scale

- Process entire O1 dataset (thousands of hours)

- Parallel processing on GPUs

- Generate classification scores for all segments

- Computationally efficient: Days vs. months for matched filtering

Trigger Catalog Generation

Threshold Selection

- Choose classification probability threshold

- Trade-off between detection efficiency and false alarm rate

- Tuned on known events and simulated signals
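
The trade-off can be made concrete with a small sketch: pick the threshold that allows at most a given number of noise-only samples through, then measure what fraction of simulated signals still passes. Both helper functions and the toy scores are hypothetical.

```python
def threshold_for_false_alarms(noise_scores, max_false_alarms):
    """Return a threshold such that at most `max_false_alarms`
    noise scores are strictly greater than it."""
    ranked = sorted(noise_scores, reverse=True)
    if max_false_alarms >= len(ranked):
        return 0.0
    return ranked[max_false_alarms]

def detection_efficiency(signal_scores, threshold):
    """Fraction of signal samples whose score strictly exceeds the threshold."""
    return sum(s > threshold for s in signal_scores) / len(signal_scores)

noise_scores = [0.1, 0.2, 0.9, 0.4, 0.6]
signal_scores = [0.95, 0.7, 0.5]

threshold = threshold_for_false_alarms(noise_scores, max_false_alarms=1)
efficiency = detection_efficiency(signal_scores, threshold)
```

Allowing more false alarms lowers the threshold and raises the efficiency, which is the tuning decision described above.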

Clustering and Ranking

- Nearby triggers (within seconds) clustered

- Select trigger with highest confidence in each cluster

- Rank all clusters by confidence score

- Top candidates for follow-up
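
A minimal sketch of the clustering and ranking steps, assuming each trigger is a `(gps_time, probability)` pair and that triggers closer than `gap` seconds to the previous one belong to the same cluster. The function name, the gap value, and the toy times are illustrative.

```python
def cluster_triggers(triggers, gap=1.0):
    """Group time-adjacent triggers, keep the most confident trigger in
    each cluster, and rank the survivors by confidence."""
    clusters = []
    for time, prob in sorted(triggers):
        # Extend the current cluster if this trigger is close to its last member.
        if clusters and time - clusters[-1][-1][0] <= gap:
            clusters[-1].append((time, prob))
        else:
            clusters.append([(time, prob)])
    best = [max(cluster, key=lambda tp: tp[1]) for cluster in clusters]
    return sorted(best, key=lambda tp: tp[1], reverse=True)

# Toy trigger list (times in seconds, not real GPS times).
triggers = [
    (100.0, 0.80),
    (100.5, 0.95),
    (101.2, 0.70),
    (200.0, 0.99),
    (300.0, 0.75),
]
ranked = cluster_triggers(triggers, gap=1.0)
```

The three triggers near 100 s collapse into one candidate, so overlapping inference windows do not inflate the trigger count.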

Catalog Contents

- ~2000 triggers above threshold in O1

- For each trigger:

  - GPS time

  - Classification probability

  - SNR estimate

  - Detector network participation

- Publicly released on GitHub for community analysis

Results

Confirmed Event Detection

All 11 O1/O2 Events Identified

The MFCNN successfully detects all confirmed gravitational wave events:

O1 Events

- GW150914: First detection, very loud (SNR~24), confidently detected

- GW151012: Lowest SNR of the three (~10), successfully identified

- GW151226: Moderate SNR (~13), detected with good confidence

O2 Events (8 additional events: 7 BBH mergers and the binary neutron star merger GW170817)

- All 8 confirmed O2 events detected when the MFCNN is applied to O2 data

- Demonstrates robustness across different data quality periods

- Consistent performance with traditional matched-filtering pipelines

Detection Confidence

- GW150914: Classification probability >0.99 (extremely confident)

- Other loud events: Probabilities >0.9

- Lower SNR events: Probabilities >0.7 (above threshold)

- Zero missed detections among confirmed events

Comparison with Traditional Pipelines

- Matched filtering (PyCBC, GstLAL): Gold standard

- MFCNN: Comparable detection efficiency

- Agreement on all confirmed events

- MFCNN: Faster inference after training

~2000 Trigger Candidates

Trigger Catalog Statistics

- Total Triggers: Approximately 2000 in O1

- Confirmed Events: 3 among these triggers

- Remaining: ~1997 candidates requiring investigation

Trigger Characteristics

- Range of confidence scores: Threshold to 0.99

- SNR distribution: Many low SNR triggers

- Time distribution: Throughout O1 observing run

- Detector coincidence: Most triggers require coincidence between the two detectors

Interpretation

Potential Explanations

- Weak Real Signals: Below traditional detection thresholds, could be genuine but marginal

- Glitches: Instrumental artifacts not fully rejected

- Statistical Fluctuations: Noise fluctuations mimicking signals

- Threshold Effects: Tuning to avoid missing real events leads to false positives

Follow-Up Required

- Parameter estimation on high-confidence triggers

- Data quality investigation for each candidate

- Waveform consistency checks

- Multi-messenger follow-up (no EM counterparts expected for BBH, but rule out other sources)

Community Impact

- Publicly available catalog enables independent studies

- Crowdsourced validation efforts

- Alternative detection pipelines can cross-check

- Potential for discovering previously missed weak events

Performance Metrics

Classification Accuracy

- Training/Validation: >95% accuracy on simulated data

- Real Data: Consistent with simulation performance

- ROC AUC: >0.98, indicating excellent discriminative ability

Computational Efficiency

- Training Time: Days on GPU cluster (one-time cost)

- Inference Time: Seconds to minutes for months of data

- Comparison: Orders of magnitude faster than exhaustive matched filtering

- Scalability: Suitable for real-time detection in future observing runs

False Alarm Rate

- Estimated from time-shift analysis (slide data between detectors)

- False alarm rate: A few per month at the chosen threshold

- Acceptable for follow-up analysis capacity

- Trade-off with detection efficiency
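
The time-shift idea can be sketched as follows: shifting one detector's trigger times by much more than the inter-site light travel time (about 10 ms) destroys any real coincidence, so the coincidences that remain estimate the accidental background. This simplified version uses plain offsets rather than circular shifts, and all names and numbers are illustrative.

```python
def count_coincidences(times_h, times_l, window, shift=0.0):
    """Count Hanford triggers that have a Livingston trigger within
    `window` seconds after shifting Livingston times by `shift`."""
    shifted = [t + shift for t in times_l]
    return sum(
        any(abs(th - tl) <= window for tl in shifted)
        for th in times_h
    )

def background_rate(times_h, times_l, window, shifts, live_time):
    """Accidental coincidences per unit time, averaged over all shifts."""
    total = sum(
        count_coincidences(times_h, times_l, window, shift)
        for shift in shifts
    )
    return total / (len(shifts) * live_time)

# Toy single-detector trigger times in seconds.
times_h = [10.0, 50.0, 90.0]
times_l = [10.005, 60.0, 95.0]

zero_lag = count_coincidences(times_h, times_l, window=0.01)
rate = background_rate(
    times_h, times_l, window=0.01, shifts=[-10.0, 5.0, 10.0], live_time=100.0
)
```

Using many shifts multiplies the effective background time, which allows false alarm rates far below one per observing run to be estimated.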

Comparison with Other DL Methods

Comparable Performance

- Other published deep learning methods (various CNN, RNN architectures)

- MFCNN achieves similar accuracy and efficiency

- No single method definitively superior

- Different architectures have specific strengths

MFCNN Advantages

- Physics-motivated design (interpretability)

- Matched-filtering inspiration (familiarity for GW community)

- Effective multi-scale feature extraction

Room for Improvement

- Ensemble methods combining multiple architectures

- Transfer learning from simulations to real data

- Continual learning as detector sensitivity improves

- Hybrid approaches: DL for detection, traditional for parameter estimation

Impact

For Gravitational Wave Astronomy

Operational Viability Demonstrated

- Deep learning moves from theory to practice

- Real data analysis validates feasibility

- Complements traditional pipelines

- Path to integration in future observing runs

Potential for New Discoveries

- ~2000 triggers warrant further investigation

- Possibility of weak signals below traditional thresholds

- Could increase detection rate by capturing marginal events

- Enhances scientific reach of detectors

Low-Latency Detection

- Fast inference enables real-time analysis

- Critical for multi-messenger astronomy (neutron star mergers)

- Rapid alerts for electromagnetic follow-up

- Public alerts to broader astronomy community

For Deep Learning in Science

Real-World Scientific Application

- Goes beyond toy problems and simulations

- Demonstrates practical impact in fundamental physics

- Validates deep learning for scientific discovery

- Encourages adoption in other fields

Physics-Informed Architecture

- Shows value of incorporating domain knowledge

- MFCNN design motivated by matched filtering

- Interpretability important for scientific acceptance

- Balance between flexibility and physical grounding

For LIGO/Virgo/KAGRA Collaboration

Complementary Detection Pipeline

- Adds diversity to detection methods

- Cross-checks for traditional pipelines

- Potentially lower false dismissal rate (missed signals)

- Useful for challenging data quality periods

Future Observing Runs

- Third-generation detectors (Einstein Telescope, Cosmic Explorer)

- Higher event rates require fast analysis

- Deep learning scalable to increased data volume

- Hybrid pipelines combining DL and traditional methods

Resources

Publication

- Journal: Physical Review D 101, 104003 (2020)

- DOI: 10.1103/PhysRevD.101.104003

Open Data and Code

- Trigger Catalog: GitHub - mfcnn_catalog

- Contains: List of ~2000 triggers from O1 analysis

- Format: GPS times, classification probabilities, metadata

- Open for community analysis and validation

Authors

- He Wang

- Shichao Wu

- Zhoujian Cao

- Xiaolin Liu

- Jian-Yang Zhu

LIGO Open Science Center

Data Access

- GWOSC: Public strain data, tutorials

- O1, O2, O3 data available

- Event catalog and parameters

- Software tools for analysis

Resources

- Tutorials for data access and analysis

- Waveform models and detector response

- Community forums and support

Related Deep Learning Work

Detection Networks

- Various CNN architectures for GW detection

- Recurrent neural networks (LSTM, GRU)

- Transformer-based approaches (WaveFormer)

- Ensemble methods

Glitch Classification

- Gravity Spy: Citizen science + ML for glitch identification

- Automated data quality vetoes

- Generative models for glitch characterization

Parameter Estimation

- Normalizing flows for posterior inference

- Neural networks for rapid PE

- Complementary to detection efforts

Software and Tools

Deep Learning Frameworks

- PyTorch, TensorFlow, Keras

- GPU acceleration for training and inference

Gravitational Wave Software

- PyCBC: Traditional matched-filtering pipeline

- GstLAL: Low-latency detection pipeline

- LALSuite: LIGO Algorithm Library

- Bilby: Bayesian inference

Data Analysis

- GWpy: Python package for GW data access and processing

- PyCBC and LALSuite for waveform generation

- Statistical tools for trigger validation

Future Directions

Methodological Improvements

- Attention mechanisms for enhanced feature extraction

- Transfer learning from O1/O2 to O3 and beyond

- Domain adaptation for different detectors

- Uncertainty quantification for predictions

Trigger Follow-Up

- Systematic parameter estimation for high-confidence triggers

- Data quality investigation for candidates

- Waveform consistency tests

- False alarm characterization

Operational Integration

- Real-time detection for O4 and future runs

- Hybrid pipelines: DL + traditional

- Low-latency alerts for multi-messenger astronomy

- Automated data quality monitoring

Science with Trigger Catalog

- Population studies including marginal detections

- Constraints on merger rates

- Testing GR with weak signals

- Multi-band observations with space-based detectors (LISA/Taiji/TianQin)

Generalization

- Neutron star mergers (different waveforms, EM counterparts)

- Continuous waves from pulsars

- Stochastic backgrounds

- Exotic sources (cosmic strings, primordial black holes)