CS231n Resources

Image credit: Stanford University CS231n


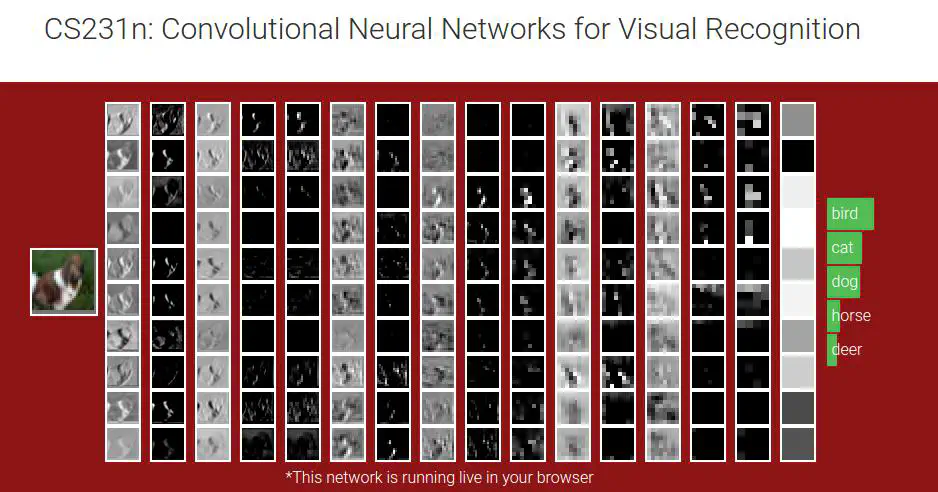

CS231n: Convolutional Neural Networks for Visual Recognition

Course Description

Computer Vision has become ubiquitous in our society, with applications in search, image understanding, apps, mapping, medicine, drones, and self-driving cars. Core to many of these applications are visual recognition tasks such as image classification, localization and detection. Recent developments in neural network (aka “deep learning”) approaches have greatly advanced the performance of these state-of-the-art visual recognition systems. This course is a deep dive into details of the deep learning architectures with a focus on learning end-to-end models for these tasks, particularly image classification. During the 10-week course, students will learn to implement, train and debug their own neural networks and gain a detailed understanding of cutting-edge research in computer vision. The final assignment will involve training a multi-million parameter convolutional neural network and applying it to the largest image classification dataset (ImageNet). We will focus on teaching how to set up the problem of image recognition, the learning algorithms (e.g. backpropagation), practical engineering tricks for training and fine-tuning the networks and guide the students through hands-on assignments and a final course project. Much of the background and materials of this course will be drawn from the ImageNet Challenge.

Official CS231n course website: http://cs231n.stanford.edu/index.html

Original Works

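This article uses the code for Q1-Q3 of Assignment 2 from Stanford's 2017 CS231n course as a running example. The goal is to understand neural networks thoroughly, starting from the very basics and going progressively deeper, with image classification as the task toward which we build a neural network step by step.

(Original, 30,671 Chinese characters, with many figures)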

My Learning Notes from Lecture Videos and Slides

- By Song Han (Spring 2017)

- By Ian Goodfellow (Spring 2017)

Course Notes

L1/L2 distances, hyperparameter search, cross-validation

parametric approach, bias trick, hinge loss, cross-entropy loss, L2 regularization, web demo

optimization landscapes, local search, learning rate, analytic/numerical gradient

chain rule interpretation, real-valued circuits, patterns in gradient flow

layers, spatial arrangement, layer patterns, layer sizing patterns, AlexNet/ZFNet/VGGNet case studies, computational considerations

model of a biological neuron, activation functions, neural net architecture, representational power

preprocessing, weight initialization, batch normalization, regularization (L2/dropout), loss functions

gradient checks, sanity checks, babysitting the learning process, momentum (+Nesterov), second-order methods, Adagrad/RMSprop, hyperparameter optimization, model ensembles
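Several of the topics listed above (cross-entropy loss, L2 regularization, analytic vs. numerical gradients, gradient checks) fit together in one small exercise. The following is a minimal pure-Python sketch, not code from the course assignments: it computes the softmax cross-entropy loss of a linear classifier on a single example, derives the analytic gradient, and compares it with a centered-difference numerical gradient using the relative error |a - n| / max(|a|, |n|) described in the CS231n notes. The function names, toy sizes, and random data are hypothetical and chosen only for illustration.

```python
import math
import random


def softmax_loss(W, x, y, reg):
    """Softmax cross-entropy loss of a linear classifier on one example, plus L2 penalty.

    W: list of C rows (one per class), each a list of D weights (hypothetical layout);
    x: list of D features; y: index of the correct class; reg: L2 strength.
    Returns (loss, dW), where dW is the analytic gradient with the same shape as W.
    """
    scores = [sum(w_d * x_d for w_d, x_d in zip(row, x)) for row in W]
    shift = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    probs = [e / total for e in exps]
    loss = -math.log(probs[y]) + 0.5 * reg * sum(w * w for row in W for w in row)
    # dL/dscore_c = p_c - 1[c == y]; chain rule through score_c = W[c] . x,
    # plus reg * W from the 0.5 * reg * ||W||^2 penalty
    dW = [
        [(probs[c] - (1.0 if c == y else 0.0)) * x_d + reg * W[c][d]
         for d, x_d in enumerate(x)]
        for c in range(len(W))
    ]
    return loss, dW


def numerical_gradient(f, W, h=1e-5):
    """Centered difference (f(W + h) - f(W - h)) / 2h for every entry of W."""
    grad = []
    for c, row in enumerate(W):
        grad_row = []
        for d in range(len(row)):
            old = W[c][d]
            W[c][d] = old + h
            f_plus = f(W)
            W[c][d] = old - h
            f_minus = f(W)
            W[c][d] = old  # restore the original weight
            grad_row.append((f_plus - f_minus) / (2 * h))
        grad.append(grad_row)
    return grad


def max_relative_error(a, b):
    """Largest |a - b| / max(|a|, |b|) over matching entries (floored to avoid 0/0)."""
    return max(
        abs(u - v) / max(abs(u), abs(v), 1e-12)
        for row_a, row_b in zip(a, b)
        for u, v in zip(row_a, row_b)
    )


random.seed(0)
C, D = 3, 4  # hypothetical toy sizes: 3 classes, 4 features
W = [[random.gauss(0, 0.1) for _ in range(D)] for _ in range(C)]
x = [random.gauss(0, 1) for _ in range(D)]
y, reg = 1, 0.1

loss, dW = softmax_loss(W, x, y, reg)
num_dW = numerical_gradient(lambda W_: softmax_loss(W_, x, y, reg)[0], W)
err = max_relative_error(dW, num_dW)
print(f"loss = {loss:.4f}, max relative error = {err:.2e}")
```

The centered difference is used instead of the one-sided (f(W + h) - f(W)) / h because its truncation error shrinks with h squared rather than h, so a correct analytic gradient should produce a very small relative error. A sanity check in the same spirit: with all-zero weights and no regularization contribution, every class gets probability 1/C, so the loss should be exactly log(C).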

From Andrej Karpathy's blog: "The Unreasonable Effectiveness of Recurrent Neural Networks" (2015)

Memo to myself

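- Update the course content from the Spring 2020 lecture videos, based on the English subtitles

- Complete and update all referenced papers

- Rewrite image-based content as Markdown text wherever possible

- Give detailed and complete source or author information for all illustrations, slides, etc.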